Updating Control Center to release 1.9.0

This section includes procedures for updating Control Center 1.7.0 or 1.8.0 to release 1.9.0.

The following list outlines recommended best practices for updating Control Center deployments:

- Review the release notes for this release and relevant prior releases. The latest information is provided there.

- On delegate hosts, most of the update steps are identical. Use screen, tmux or a similar program to establish sessions on each delegate host and perform the steps at the same time.

- Review and verify the settings in delegate host configuration files (/etc/default/serviced) before starting the update. Ideally, the settings on all delegate hosts are identical, except on ZooKeeper nodes and delegate hosts that do not mount the DFS.

- Review the update procedures before performing them. Every effort is made to avoid mistakes and anticipate needs; nevertheless, the instructions may be incorrect or inadequate for some requirements or environments.

Updating 1.7.0 or 1.8.0 to 1.9.0

- Download the required files

- Stage Docker image files

- Update the master host

- Update the delegate hosts

- Start Control Center

- Perform post-upgrade procedures

Downloading Control Center files for release 1.9.0

Use this procedure to download required files to a workstation, and then copy the files to the hosts that need them.

To perform this procedure, you need:

- A workstation with internet access.

- Permission to download files from delivery.zenoss.io. Customers can request permission by filing a ticket at the Zenoss Support site.

- A secure network copy program.

Follow these steps:

-

In a web browser, navigate to delivery.zenoss.io, and then log in.

-

Download the self-installing Docker image files.

install-zenoss-serviced-isvcs:v69.run

install-zenoss-isvcs-zookeeper:v14.run

-

Download the Control Center RPM file.

serviced-1.9.0-1.x86_64.rpm

-

Updates only: Download data migration images and a script.

install-zenoss-serviced-isvcs:v67.run (updates from 1.7.0 only)

install-zenoss-serviced-isvcs:v68.run

migrate_cc_services_data.sh

-

Identify the operating system release on Control Center hosts. Enter the following command on each Control Center host in your deployment, if necessary. All Control Center hosts should be running the same operating system release and kernel.

cat /etc/redhat-release

-

RHEL 8.4+ only: Download the containerd RPM file from Docker:

curl -#O https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.13-3.1.el7.x86_64.rpm

-

Download the RHEL/CentOS repository mirror file for your deployment. The download site provides repository mirror files containing the packages that Control Center requires. For RHEL 8.3, use the 7.9 file.

- yum-mirror-centos7.2-1511-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.3-1611-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.4-1708-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.5-1804-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.6-1810-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.8.2003-serviced-1.9.0.x86_64.rpm

- yum-mirror-centos7.9.2009-serviced-1.9.0.x86_64.rpm

-

Optional: Download the Zenoss Pretty Good Privacy (PGP) key, if desired. You can use the Zenoss PGP key to verify Zenoss RPM files and the yum metadata of the repository mirror.

-

Download the key.

curl --location -o /tmp/tmp.html 'https://keyserver.ubuntu.com/pks/lookup?op=get&search=0xED0A5FD2AA5A1AD7'

-

Determine whether the download succeeded.

grep -Ec '^\-\-\-\-\-BEGIN PGP' /tmp/tmp.html

- If the result is 0, return to the previous substep.

- If the result is 1, proceed to the next substep.

-

Extract the key.

awk '/^-----BEGIN PGP.*$/,/^-----END PGP.*$/' /tmp/tmp.html > ./RPM-PGP-KEY-Zenoss

-

Use a secure copy program to copy the files to Control Center hosts.

- Copy all files to the master host.

-

Copy the following files to all delegate hosts:

- RHEL/CentOS RPM file

- containerd RPM file

- Control Center RPM file

- Zenoss PGP key file

-

Copy the Docker image file for ZooKeeper to delegate hosts that are ZooKeeper ensemble nodes.
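Choosing the repository mirror file from the operating system release can be sketched as a small lookup. This is only an illustration: the function name mirror_for_release is hypothetical, and the mapping simply restates the file list in this procedure, including the note that RHEL 8.3 uses the 7.9 file.

```shell
# Map the text of /etc/redhat-release to a repository mirror file name.
# mirror_for_release is a hypothetical helper, not part of Control Center.
mirror_for_release() {
    # Extract the first MAJOR.MINOR pair, e.g. "7.9" from "... release 7.9.2009 (Core)".
    ver=$(printf '%s\n' "$1" | sed -n 's/^[^0-9]*\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p')
    case "$ver" in
        7.2) suffix="7.2-1511" ;;
        7.3) suffix="7.3-1611" ;;
        7.4) suffix="7.4-1708" ;;
        7.5) suffix="7.5-1804" ;;
        7.6) suffix="7.6-1810" ;;
        7.8) suffix="7.8.2003" ;;
        7.9|8.3) suffix="7.9.2009" ;;   # RHEL 8.3 uses the 7.9 file
        *) echo "no mirror file listed for release '$ver'" >&2; return 1 ;;
    esac
    echo "yum-mirror-centos${suffix}-serviced-1.9.0.x86_64.rpm"
}

# Example with a sample release string.
mirror_for_release "CentOS Linux release 7.9.2009 (Core)"
```

On a Control Center host, the argument could be supplied as "$(cat /etc/redhat-release)".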

Staging Docker image files on the master host

Before performing this procedure, verify that approximately 640MB of temporary space is available on the file system where /root is located.

Use this procedure to copy Docker image files to the Control Center master host. The files are used when Docker is fully configured.

-

Log in to the master host as root or as a user with superuser privileges.

-

Copy or move the archive files to /root.

-

Add execute permission to the files.

chmod +x /root/*.run
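The free-space prerequisite for this procedure can be checked with a short helper. The following is a minimal sketch; the function name has_free_mb is hypothetical, and it assumes a df that accepts the -P and -m options, as GNU df does.

```shell
# Succeed when the file system holding PATH has at least REQUIRED_MB megabytes free.
# has_free_mb is a hypothetical helper name.
has_free_mb() {
    # Column 4 of POSIX-format df output is the available space.
    avail=$(df -Pm "$1" 2>/dev/null | awk 'NR == 2 { print $4 }')
    [ -n "$avail" ] && [ "$avail" -ge "$2" ]
}

# 640 MB on the file system where /root is located, as stated above.
if has_free_mb /root 640; then
    echo "enough temporary space for the image files"
else
    echo "free up space before staging the image files"
fi
```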

Staging a Docker image file on ZooKeeper ensemble nodes

Before performing this procedure, verify that approximately 170MB of

temporary space is available on the file system where /root is

located.

Use this procedure to add a Docker image file to the Control Center delegate hosts that are ZooKeeper ensemble nodes. Delegate hosts that are not ZooKeeper ensemble nodes do not need the file.

-

Log in to a delegate host as root or as a user with superuser privileges.

-

Copy or move the install-zenoss-isvcs-zookeeper-v*.run file to /root.

-

Add execute permission to the file.

chmod +x /root/*.run

Update the master host

The following procedures are to be performed on the master host of a multi-host deployment or on the single host in a single-host deployment.

The procedures for updating a delegate host can be found below.

Installing the repository mirror

Use this procedure to install the Zenoss repository mirror on a Control Center host. The mirror contains packages that are required on all Control Center hosts. Repeat this procedure on each host in your deployment.

-

Log in to the target host as root or as a user with superuser privileges.

-

Move the RPM files and the Zenoss PGP key file to /tmp.

-

Upgrades only: Remove the existing repository mirror, if necessary.

-

Search for the mirror.

yum list --disablerepo=* | awk '/^yum-mirror/ { print $1}'

-

Remove the mirror.

Replace Old-Mirror with the name of the Zenoss repository mirror returned in the previous substep:

yum remove Old-Mirror

-

Install the repository mirror.

yum install /tmp/yum-mirror-*.rpm

The yum command copies the contents of the RPM file to /opt/zenoss-repo-mirror.

-

Optional: Install the Zenoss PGP key, and then test the package files, if desired.

-

Move the Zenoss PGP key to the mirror directory.

mv /tmp/RPM-PGP-KEY-Zenoss /opt/zenoss-repo-mirror

-

Install the key.

rpm --import /opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss

-

Test the repository mirror package file.

rpm -K /tmp/yum-mirror-*.rpm

On success, the result includes the file name and the following information:

(sha1) dsa sha1 md5 gpg OK

-

Test the Control Center package file.

rpm -K /tmp/serviced-VERSION-1.x86_64.rpm

-

-

Optional: Update the configuration file of the Zenoss repository mirror to enable PGP key verification, if desired.

-

Open the repository mirror configuration file (/etc/yum.repos.d/zenoss-mirror.repo) with a text editor, and then add the following lines to the end of the file.

repo_gpgcheck=1
gpgkey=file:///opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss

-

Save the file, and then close the editor.

-

Update the yum metadata cache.

yum makecache fast

The cache update process includes the following prompt:

Retrieving key from file:///opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss
Importing GPG key 0xAA5A1AD7:
Userid : "Zenoss, Inc. <dev@zenoss.com>"
Fingerprint: f31f fd84 6a23 b3d5 981d a728 ed0a 5fd2 aa5a 1ad7
From : /opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss
Is this ok [y/N]:

Enter y.

-

Move the Control Center package file to the mirror directory.

mv /tmp/serviced-VERSION-1.x86_64.rpm /opt/zenoss-repo-mirror

-

Optional: Delete the mirror package file, if desired.

rm /tmp/yum-mirror-*.rpm
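The optional edit that enables PGP key verification can also be scripted. The sketch below appends the two settings only when they are absent, so running it twice is harmless. The function name enable_repo_gpgcheck and the contents of the scratch file are assumptions for illustration; the real zenoss-mirror.repo file may look different.

```shell
# Append the PGP settings to a repository file unless they are already present.
# enable_repo_gpgcheck is a hypothetical helper name.
enable_repo_gpgcheck() {
    repo_file=$1
    key_url=${2:-file:///opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss}
    grep -q '^repo_gpgcheck=' "$repo_file" || echo "repo_gpgcheck=1" >> "$repo_file"
    grep -q '^gpgkey=' "$repo_file" || echo "gpgkey=$key_url" >> "$repo_file"
}

# Try it on a scratch file with made-up contents rather than the live file.
scratch=$(mktemp)
printf '[zenoss-mirror]\nname=example\n' > "$scratch"
enable_repo_gpgcheck "$scratch"
enable_repo_gpgcheck "$scratch"   # the second call changes nothing
cat "$scratch"
rm -f "$scratch"
```

To apply it to a host, pass /etc/yum.repos.d/zenoss-mirror.repo as the first argument, and then update the yum metadata cache as described above.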

Stopping Control Center on the master host

Use this procedure to stop the Control Center service (serviced) on the master host.

- Log in to the master host as root or as a user with superuser privileges.

-

Stop the top-level service serviced is managing, if necessary.

-

Show the status of running services.

serviced service status

The top-level service is the service listed immediately below the headings line.

- If the status of the top-level service and all child services is stopped, proceed to the next step.

- If the status of the top-level service and all child services is not stopped, perform the remaining substeps.

-

Stop the top-level service.

serviced service stop Zenoss.resmgr

-

Monitor the stop.

serviced service status

When the status of the top-level service and all child services is stopped, proceed to the next step.

-

Stop the Control Center service.

systemctl stop serviced

-

Ensure that no containers remain in the local repository.

-

Display the identifiers of all containers, running and exited.

docker ps -qa

- If the command returns no result, stop. This procedure is complete.

- If the command returns a result, perform the following substeps.

-

Remove all remaining containers.

docker ps -qa | xargs --no-run-if-empty docker rm -fv

-

Display the identifiers of all containers, running and exited.

docker ps -qa

- If the command returns no result, stop. This procedure is complete.

- If the command returns a result, perform the remaining substeps.

-

Disable the automatic startup of serviced.

systemctl disable serviced

-

Reboot the host.

reboot

-

Log in to the master host as root, or as a user with superuser privileges.

-

Enable the automatic startup of serviced.

systemctl enable serviced

Updating Docker 18.09.6 to 19.03.11

Use this procedure to update Docker to version 19.03.11.

-

Log in as root or as a user with superuser privileges.

-

Determine which version of Docker is installed.

docker info 2>/dev/null | awk '/^ Server/ { print $3 }'

- If the result is 19.03.11, stop. This procedure is unnecessary; proceed to the next one.

- If the result is 18.09.6, perform the remaining steps in this procedure.

- If the result is any earlier version, proceed to Updating Control Center to release 1.8.0.

-

Update the operating system, if necessary.

-

Determine which release is installed.

cat /etc/redhat-release

- If the result is greater than 7.2, proceed to the next step.

- If the result is less than or equal to 7.1, perform the remaining substeps.

-

Disable automatic start of serviced.

systemctl disable serviced

-

Update the operating system, and then restart the host.

The following commands require internet access or a local mirror of operating system packages.

yum makecache fast && yum update --skip-broken && reboot

-

Log in as root or as a user with superuser privileges.

-

Enable automatic start of serviced.

systemctl enable serviced

-

Update the Linux kernel, if necessary.

-

Determine which kernel version is installed.

uname -r

If the result is lower than 3.10.0-327.22.2.el7.x86_64, perform the following substeps.

-

Disable automatic start of serviced.

systemctl disable serviced

-

Update the kernel, and then restart the host.

The following commands require internet access or a local mirror of operating system packages.

yum makecache fast && yum update kernel --skip-broken && reboot

-

Log in as root, or as a user with superuser privileges.

-

Enable automatic start of serviced.

systemctl enable serviced

-

Stop the Docker service.

systemctl stop docker

-

Remove Docker 18.09.6.

-

Remove without checking dependencies.

rpm -e --nodeps docker-ce

The result includes the following informative message, which can be ignored:

/usr/bin/dockerd has not been configured as an alternative for dockerd

-

Clean the yum databases.

yum clean all

-

Install Docker CE 19.03.11.

yum install --enablerepo=zenoss-mirror docker-ce-19.03.11-3.el7 docker-ce-cli

If yum returns an error due to dependency issues, see Resolving package dependency conflicts for potential resolutions.

-

Start the Docker service.

systemctl start docker
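The version decision at the start of this procedure can be expressed as a small case statement. This is a sketch under assumptions: the function name docker_update_action and its result labels are hypothetical, and it reads the version with docker version --format rather than the docker info command shown in the procedure.

```shell
# Decide what to do from a Docker server version string.
# docker_update_action and its labels are hypothetical.
docker_update_action() {
    case "$1" in
        19.03.11) echo "skip" ;;      # already at the target version
        18.09.6)  echo "update" ;;    # perform this procedure
        "")       echo "unknown" ;;   # Docker not installed or not running
        *)        echo "other" ;;     # see the branches described in the procedure
    esac
}

# Query the server version; an empty result means the query failed.
installed=$(docker version --format '{{.Server.Version}}' 2>/dev/null || true)
docker_update_action "$installed"
```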

Loading image files

Use this procedure to load images into the local Docker registry on a host.

- Log in to the host as root or as a user with superuser privileges.

-

Change directory to /root.

cd /root -

Load the images.

for image in install-zenoss-*.run
do
  /bin/echo -en "\nLoading $image..."
  yes | ./$image
done

-

List the images in the registry.

docker images

The result should show one image for each archive file.

-

Optional: Delete the archive files, if desired.

rm -i ./install-zenoss-*.run

Updating serviced on the master host for release 1.9.0

Use this procedure to update the serviced binary on the Control Center master host. For delegate hosts, see Updating serviced on delegate hosts for release 1.9.0.

In multi-host deployments, stop serviced on the master host first.

This procedure is longer and more complex than previous versions; please review the entire procedure before proceeding. In particular, it includes an optional migration of Resource Manager log data, which could take a long time. The migration is optional because Control Center does not require historical logs.

The serviced RPM includes a script that requires uid/gid 1001 for its own use. If 1001 is already in use for another user/group, change it temporarily before starting this procedure.

In testing, data migration speeds averaged about 1 megabyte per second. However, migration time is much longer and impossible to estimate when the file systems involved are mounted and the host is experiencing network performance issues. This procedure includes steps that help you estimate the amount of time required to migrate your log data.

To perform the optional log migration, you need the migrate_cc_services_data.sh script, which is available to customers on delivery.zenoss.io.

If you are using the TMPDIR variable in /etc/default/serviced, you must determine whether the specified path is mounted noexec before proceeding. Step 5 provides a template for doing so.

Follow these steps:

-

Log in to the host as root or as a user with superuser privileges.

-

Start the docker service, if necessary.

systemctl is-active docker || systemctl start docker

-

Disable SELinux temporarily, if necessary.

-

Determine the current mode.

sestatus | awk '/Current mode:/ { print $3 }'

- If the result is not enforcing, proceed to step 4.

- If the result is enforcing, perform the next substep.

-

Disable SELinux temporarily.

setenforce 0

-

Copy the current serviced configuration file to a backup and set the backup's permissions to read-only.

cp /etc/default/serviced /etc/default/serviced-pre-1.9.0
chmod 0440 /etc/default/serviced-pre-1.9.0

-

Create a temporary directory, if necessary. The RPM installation process writes a script to TMP and starts it with exec to migrate Elasticsearch.

-

Determine whether the /tmp directory is mounted on a partition with the noexec option set.

awk '$2 ~ /\/tmp/ { if ($4 ~ /noexec/) print "protected" }' < /proc/mounts

If the command returns a result, perform the substeps. Otherwise, proceed to step 6.

-

Create a temporary storage variable for TMP, if necessary.

test -n "$TMP" && tempTMP=$TMP

-

Create a temporary directory.

mkdir $HOME/tempdir && export TMP=$HOME/tempdir

-

Set variables for Control Center data paths.

myIsvcs=$(test -f /etc/default/serviced && grep -E '^[[:space:]]*SERVICED_ISVCS_PATH' /etc/default/serviced | cut -f2 -d= | tr -d \"\')
myIsvcs=$(echo $myIsvcs | awk '{ print $NF }')
export HOST_ISVCS_DIR="${myIsvcs:-/opt/serviced/var/isvcs}"
myBackups=$(test -f /etc/default/serviced && grep -E '^[[:space:]]*SERVICED_BACKUPS_PATH' /etc/default/serviced | cut -f2 -d= | tr -d \"\')
myBackups=$(echo $myBackups | awk '{ print $NF }')
myBackups="${myBackups:-/opt/serviced/var/backups}"

-

Prepare for log data migration. Note: Even if you are not planning to migrate log data, you must ensure space is available for a backup of the data.

-

Determine the size of the log data to migrate, if desired.

du -sh ${HOST_ISVCS_DIR}/elasticsearch-logstash | cut -f1

The result includes a numeral and a units character (M for megabytes, G for gigabytes).

-

Use the result of the previous step to estimate the amount of time required to migrate log data. Under ideal conditions, migration speed is approximately 1 megabyte per second.

-

Determine the amount of space available in the backups directory.

df -h ${myBackups}

The Avail column of the result shows the available space.

-

Compare the size of the data to migrate (step 7a) with the amount of space available in the backups directory (step 7c). If the amount of space available is not at least 4 times greater than the size of the data to migrate, choose an alternate path that does have enough space, and then update the temporary variable:

myBackups=<AlternatePath>

You may need to mount additional space on your host.

-

-

Set up variables and paths for storing backup copies of your historical data.

export HOST_LS_WORKING_DIR="${myBackups}/logstash" && mkdir -p $HOST_LS_WORKING_DIR
export HOST_BACKUP_DIR="${myBackups}/serviced" && mkdir -p $HOST_BACKUP_DIR

-

Install the new serviced RPM package. The installation includes a migration of internal services data, which takes some extra time.

yum install --enablerepo=zenoss-mirror /opt/zenoss-repo-mirror/serviced-1.9.0-1.x86_64.rpm

If yum returns an error due to dependency issues, see Resolving package dependency conflicts for potential resolutions.

-

(Optional) Migrate historical log data, if desired. Reminder: After the upgrade is complete and serviced is restarted, create an Elasticsearch index for the migrated data.

<PathToScript>/migrate_cc_services_data.sh

If messages similar to the following are displayed for a long time, the script has stalled and will not complete:

2021-05-12 15:30:52,967 [INFO] >>> Documents processed: 11680777/24441034; current speed 0 doc's/sec <<<
2021-05-12 15:31:12,971 [INFO] GET http://127.0.0.1:9101/_cat/count?h=count [status:200 request:0.003s]
|XXXXXXXXXXXXXXXXXXXXXXX---------------------------| 47.000%
2021-05-12 15:31:12,971 [INFO] >>> Documents processed: 11680777/24441034; current speed 0 doc's/sec <<<
2021-05-12 15:31:32,975 [INFO] GET http://127.0.0.1:9101/_cat/count?h=count [status:200 request:0.003s]
|XXXXXXXXXXXXXXXXXXXXXXX---------------------------| 47.000%
2021-05-12 15:31:32,975 [INFO] >>> Documents processed: 11680777/24441034; current speed 0 doc's/sec <<<
2021-05-12 15:31:52,983 [INFO] GET http://127.0.0.1:9101/_cat/count?h=count [status:200 request:0.004s]

To work around this issue, perform the following substeps:

-

Log in to a new terminal session on the Control Center master host as root or as a user with superuser privileges.

-

Stop all running containers.

docker container stop $(docker container list -q)

-

Stop the Elasticsearch migration process.

kill $(ps aux | awk '/[e]s_cluster.ini/ { print $2 }')

-

Exit the new terminal session and return to the original session.

-

Verify that the required environment variables are set.

env | grep -E 'HOST_(BACKUP|ISVCS|LS_WORKING)_DIR'

If the command returns no result, return to steps 6 through 8 to reset the HOST_BACKUP_DIR, HOST_ISVCS_DIR, and HOST_LS_WORKING_DIR variables.

-

Delete the update attempt.

pushd ${HOST_LS_WORKING_DIR}
rm -rf ./elasticsearch-logstash-new
pushd ${HOST_ISVCS_DIR}
rm -rf ./elasticsearch-logstash
popd

-

Restore the backup file.

pushd ${HOST_BACKUP_DIR}
cp -ar elasticsearch-logstash.backup ${HOST_ISVCS_DIR}/elasticsearch-logstash

-

Return to step 10 and start the migration script again.

-

-

Enable SELinux, if necessary. Perform this step only if you disabled SELinux in step 3:

setenforce 1

-

Restore the previous value of TMP, if necessary.

test -n "$tempTMP" && export TMP=$tempTMP

-

Make a backup copy of the new configuration file and set permissions to read-only.

cp /etc/default/serviced /etc/default/serviced-1.9.0-orig
chmod 0440 /etc/default/serviced-1.9.0-orig

-

Compare the new configuration file with the configuration file of the previous release.

-

Identify the configuration files to compare.

ls -l /etc/default/serviced*

The original versions of the configuration files should end with orig, but you may have to compare the dates of the files.

-

Compare the new and previous configuration files. Replace New-Version with the name of the new configuration file, and replace Previous-Version with the name of the previous configuration file:

diff New-Version Previous-Version

For example, to compare version 1.9.0 with the most recent previous version, enter the following command:

diff /etc/default/serviced-1.8.0-orig /etc/default/serviced-1.9.0-orig

-

If the command returns no result, restore the backup of the previous configuration file.

cp /etc/default/serviced-pre-1.9.0 /etc/default/serviced && chmod 0644 /etc/default/serviced

-

If the command returns a result, restore the backup of the previous configuration file, and then optionally, use the results to edit the restored version.

cp /etc/default/serviced-pre-1.9.0 /etc/default/serviced && chmod 0644 /etc/default/serviced

For more information about configuring a host, see Configuration variables.

-

Reload the systemd manager configuration.

systemctl daemon-reload
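The time and space estimates for the optional log migration reduce to simple arithmetic, sketched below. Both function names are hypothetical. The default rate of 1 megabyte per second is the testing average quoted above, so treat the result as a lower bound rather than a prediction.

```shell
# Rough migration time in whole minutes for SIZE_MB megabytes of log data.
# The rate defaults to the approximately 1 MB/s figure quoted in this procedure.
estimate_migration_minutes() {
    size_mb=$1
    rate_mb_per_s=${2:-1}
    # Seconds = size / rate; add 59 before dividing to round up to minutes.
    echo $(( (size_mb / rate_mb_per_s + 59) / 60 ))
}

# Succeed when the available space is at least four times the data size.
# Both arguments must use the same unit, for example kilobytes.
enough_backup_space() {
    data_size=$1
    avail_size=$2
    [ "$avail_size" -ge $(( data_size * 4 )) ]
}

estimate_migration_minutes 5120   # 5 GB of log data; prints 86
```

On a host, the inputs could come from du -sm for the log data directory and from the available-space column of df for the backups directory.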

Optional: Installing a security certificate

The default, insecure certificate that Control Center uses for TLS-encrypted communications is based on a public certificate compiled into serviced. Use this procedure to replace the default certificate files with your own files.

- If you are using virtual host public endpoints for your Zenoss Service Dynamics deployment, you need a wildcard certificate or a subject alternative name (SAN) certificate.

- If your end users access the browser interface through a reverse proxy, the reverse proxy may provide the browser with its own SSL certificate. If so, please contact Zenoss Support for additional assistance.

To perform this procedure, you need valid certificate files. For information about generating a self-signed certificate, see Creating a self-signed security certificate.

To use your own certificate files, perform this procedure on the Control Center master host and on each Control Center delegate host in your environment.

Follow these steps:

-

Log in to the host as root or as a user with superuser privileges.

-

Use a secure copy program to copy the key and certificate files to /tmp.

-

Move the key file to the /etc/pki/tls/private directory. Replace <KEY_FILE> with the name of your key file:

mv /tmp/<KEY_FILE>.key /etc/pki/tls/private

-

Move the certificate file to the /etc/pki/tls/certs directory. Replace <CERT_FILE> with the name of your certificate file:

mv /tmp/<CERT_FILE>.crt /etc/pki/tls/certs

-

Updates only: Create a backup copy of the Control Center configuration file. Do not perform this step for a fresh install:

cp /etc/default/serviced /etc/default/serviced.before-cert-files

-

Edit the Control Center configuration file.

- Open /etc/default/serviced in a text editor.

- Locate the line for the SERVICED_KEY_FILE variable, and then make a copy of the line, immediately below the original.

- Remove the number sign character (#) from the beginning of the line.

- Replace the default value with the full pathname of your key file.

- Locate the line for the SERVICED_CERT_FILE variable, and then make a copy of the line, immediately below the original.

- Remove the number sign character (#) from the beginning of the line.

- Replace the default value with the full pathname of your certificate file.

- Save the file, and then close the editor.

-

Verify the settings in the configuration file.

grep -E '^[[:space:]]*[A-Z_]+' /etc/default/serviced

-

Updates only: Reload the systemd manager configuration. Do not perform this step for a fresh install:

systemctl daemon-reload
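The manual edit of the configuration file (copy the commented line, remove the number sign, replace the value) can be approximated with awk. This is a sketch, not the documented method. The function name activate_serviced_var is hypothetical, and the commented default line in the scratch file is made up, so the real /etc/default/serviced may be formatted differently. The function writes the result to standard output and leaves the input file untouched.

```shell
# Print FILE with an active NAME=VALUE line added directly below the
# first commented-out "# NAME=..." line.
# activate_serviced_var is a hypothetical helper name.
activate_serviced_var() {
    awk -v name="$2" -v value="$3" '
        { print }
        !done && $0 ~ ("^#[ \t]*" name "=") { print name "=" value; done = 1 }
    ' "$1"
}

# Demonstrate on a scratch file with a made-up default line.
scratch=$(mktemp)
printf '# SERVICED_KEY_FILE=/hypothetical/default.key\n' > "$scratch"
activate_serviced_var "$scratch" SERVICED_KEY_FILE /etc/pki/tls/private/example.key
rm -f "$scratch"
```

Review the output before replacing the live file, and keep the backup copy created earlier in this procedure.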

Update the delegate hosts

Installing the repository mirror

Use this procedure to install the Zenoss repository mirror on a Control Center host. The mirror contains packages that are required on all Control Center hosts. Repeat this procedure on each host in your deployment.

-

Log in to the target host as root or as a user with superuser privileges.

-

Move the RPM files and the Zenoss PGP key file to /tmp.

-

Upgrades only: Remove the existing repository mirror, if necessary.

-

Search for the mirror.

yum list --disablerepo=* | awk '/^yum-mirror/ { print $1}'

-

Remove the mirror.

Replace Old-Mirror with the name of the Zenoss repository mirror returned in the previous substep:

yum remove Old-Mirror

-

Install the repository mirror.

yum install /tmp/yum-mirror-*.rpm

The yum command copies the contents of the RPM file to /opt/zenoss-repo-mirror.

-

Optional: Install the Zenoss PGP key, and then test the package files, if desired.

-

Move the Zenoss PGP key to the mirror directory.

mv /tmp/RPM-PGP-KEY-Zenoss /opt/zenoss-repo-mirror

-

Install the key.

rpm --import /opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss

-

Test the repository mirror package file.

rpm -K /tmp/yum-mirror-*.rpm

On success, the result includes the file name and the following information:

(sha1) dsa sha1 md5 gpg OK

-

Test the Control Center package file.

rpm -K /tmp/serviced-VERSION-1.x86_64.rpm

-

-

Optional: Update the configuration file of the Zenoss repository mirror to enable PGP key verification, if desired.

-

Open the repository mirror configuration file (/etc/yum.repos.d/zenoss-mirror.repo) with a text editor, and then add the following lines to the end of the file.

repo_gpgcheck=1
gpgkey=file:///opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss

-

Save the file, and then close the editor.

-

Update the yum metadata cache.

yum makecache fast

The cache update process includes the following prompt:

Retrieving key from file:///opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss
Importing GPG key 0xAA5A1AD7:
Userid : "Zenoss, Inc. <dev@zenoss.com>"
Fingerprint: f31f fd84 6a23 b3d5 981d a728 ed0a 5fd2 aa5a 1ad7
From : /opt/zenoss-repo-mirror/RPM-PGP-KEY-Zenoss
Is this ok [y/N]:

Enter y.

-

Move the Control Center package file to the mirror directory.

mv /tmp/serviced-VERSION-1.x86_64.rpm /opt/zenoss-repo-mirror

-

Optional: Delete the mirror package file, if desired.

rm /tmp/yum-mirror-*.rpm

Stopping Control Center on a delegate host

Use this procedure to stop the Control Center service (serviced) on a delegate host in a multi-host deployment. Repeat this procedure on each delegate host in your deployment.

Before performing this procedure on any delegate host, stop Control Center on the master host.

- Log in to the delegate host as root or as a user with superuser privileges.

-

Stop the Control Center service.

systemctl stop serviced

-

Ensure that no containers remain in the local repository.

-

Display the identifiers of all containers, running and exited.

docker ps -qa

- If the command returns no result, proceed to the next step.

- If the command returns a result, perform the following substeps.

-

Remove all remaining containers.

docker ps -qa | xargs --no-run-if-empty docker rm -fv

- If the remove command completes, proceed to the next step.

- If the remove command does not complete, the most likely cause is an NFS conflict. Perform the following substeps.

-

Stop the NFS and Docker services.

systemctl stop nfs && systemctl stop docker

-

Start the NFS and Docker services.

systemctl start nfs && systemctl start docker

-

Repeat the attempt to remove all remaining containers.

docker ps -qa | xargs --no-run-if-empty docker rm -fv

- If the remove command completes, proceed to the next step.

- If the remove command does not complete, perform the remaining substeps.

-

Disable the automatic startup of serviced.

systemctl disable serviced

-

Reboot the host.

reboot

-

Log in to the delegate host as root, or as a user with superuser privileges.

-

Enable the automatic startup of serviced.

systemctl enable serviced

-

Dismount all filesystems mounted from the Control Center master host.

This step ensures no stale mounts remain when the storage on the master host is replaced.

-

Identify filesystems mounted from the master host.

awk '/serviced/ { print $1, $2 }' < /proc/mounts | grep -v '/opt/serviced/var/isvcs'

- If the preceding command returns no result, stop. This procedure is complete.

- If the preceding command returns a result, perform the following substeps.

-

Force the filesystems to dismount.

for FS in $(awk '/serviced/ { print $2 }' < /proc/mounts | grep -v '/opt/serviced/var/isvcs')
do
  umount -f $FS
done

-

Identify filesystems mounted from the master host.

awk '/serviced/ { print $1, $2 }' < /proc/mounts | grep -v '/opt/serviced/var/isvcs'

- If the preceding command returns no result, stop. This procedure is complete.

- If the preceding command returns a result, perform the following substeps.

-

Perform a lazy dismount.

for FS in $(awk '/serviced/ { print $2 }' < /proc/mounts | grep -v '/opt/serviced/var/isvcs')
do
  umount -f -l $FS
done

-

Restart the NFS service.

systemctl restart nfs

-

Determine whether any filesystems remain mounted from the master host.

awk '/serviced/ { print $1, $2 }' < /proc/mounts | grep -v '/opt/serviced/var/isvcs'

- If the preceding command returns no result, stop. This procedure is complete.

- If the preceding command returns a result, perform the remaining substeps.

-

Disable the automatic startup of serviced.

systemctl disable serviced

-

Reboot the host.

reboot

-

Log in to the delegate host as root, or as a user with superuser privileges.

-

Enable the automatic startup of serviced.

systemctl enable serviced
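The mount check that this procedure repeats after each dismount attempt can be wrapped in a function so that the same filter is used every time. The sketch below is an illustration with a hypothetical function name; it reuses the awk and grep filters from the steps above and accepts an alternate mounts file so that it can be tried without touching a live host.

```shell
# List mount points whose mounts entry mentions serviced, excluding the
# internal services directory. serviced_mounts is a hypothetical helper name.
serviced_mounts() {
    mounts_file=${1:-/proc/mounts}
    awk '/serviced/ { print $2 }' "$mounts_file" | grep -v '/opt/serviced/var/isvcs' || true
}

remaining=$(serviced_mounts)
if [ -z "$remaining" ]; then
    echo "no filesystems remain mounted from the master host"
else
    echo "still mounted:"
    echo "$remaining"
fi
```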


Updating Docker 18.09.6 to 19.03.11

Use this procedure to update Docker to version 19.03.11.

-

Log in as root or as a user with superuser privileges.

-

Determine which version of Docker is installed.

docker info 2>/dev/null | awk '/^ Server/ { print $3 }'

- If the result is 19.03.11, stop. This procedure is unnecessary; proceed to the next one.

- If the result is 18.09.6, perform the remaining steps in this procedure.

- If the result is any earlier version, proceed to Updating Control Center to release 1.8.0.

-

Update the operating system, if necessary.

-

Determine which release is installed.

cat /etc/redhat-release

- If the result is greater than 7.2, proceed to the next step.

- If the result is less than or equal to 7.1, perform the remaining substeps.

-

Disable automatic start of serviced.

systemctl disable serviced

-

Update the operating system, and then restart the host.

The following commands require internet access or a local mirror of operating system packages.

yum makecache fast && yum update --skip-broken && reboot

-

Log in as root or as a user with superuser privileges.

-

Enable automatic start of serviced.

systemctl enable serviced

-

Update the Linux kernel, if necessary.

-

Determine which kernel version is installed.

uname -r

If the result is lower than 3.10.0-327.22.2.el7.x86_64, perform the following substeps.

-

Disable automatic start of serviced.

systemctl disable serviced

-

Update the kernel, and then restart the host.

The following commands require internet access or a local mirror of operating system packages.

yum makecache fast && yum update kernel --skip-broken && reboot

-

Log in as root, or as a user with superuser privileges.

-

Enable automatic start of serviced.

systemctl enable serviced

-

Stop the Docker service.

systemctl stop docker

-

Remove Docker 18.09.6.

-

Remove without checking dependencies.

rpm -e --nodeps docker-ce

The result includes the following informative message, which can be ignored:

/usr/bin/dockerd has not been configured as an alternative for dockerd

-

Clean the yum databases.

yum clean all

-

Install Docker CE 19.03.11.

yum install --enablerepo=zenoss-mirror docker-ce-19.03.11-3.el7 docker-ce-cli

If yum returns an error due to dependency issues, see Resolving package dependency conflicts for potential resolutions.

-

Start the Docker service.

systemctl start docker
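
The operating system check in the preceding procedure can be scripted. The following is a minimal sketch and not part of the product: the function names release_major_minor and os_update_needed are hypothetical, and the sketch assumes that /etc/redhat-release contains the word "release" followed by a MAJOR.MINOR version. The procedure does not state what to do when the release is exactly 7.2, so the sketch reports that an update is needed only for 7.1 and earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print MAJOR.MINOR from a release string such as
# "CentOS Linux release 7.2.1511 (Core)". Prints nothing if no match.
release_major_minor() {
    printf '%s\n' "$1" |
        sed -n 's/.*release \([0-9][0-9]*\)\.\([0-9][0-9]*\).*/\1.\2/p'
}

# Hypothetical helper: return 0 when the release is 7.1 or earlier,
# 1 when it is later, and 2 when the string cannot be parsed.
os_update_needed() {
    local mm major minor
    mm=$(release_major_minor "$1")
    [ -n "$mm" ] || return 2
    major=${mm%%.*}
    minor=${mm#*.}
    if [ "$major" -lt 7 ]; then return 0; fi
    if [ "$major" -eq 7 ] && [ "$minor" -le 1 ]; then return 0; fi
    return 1
}

# Possible usage on a host:
#   os_update_needed "$(cat /etc/redhat-release)" && echo "update needed"
```

Treat the result as a hint only; the release notes remain the authority on supported operating system versions.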

Updating serviced on delegate hosts for release 1.9.0

Use this procedure to update the serviced binary on Control Center delegate hosts. Perform this procedure on each delegate host in your Control Center deployment. For the master host, see Updating serviced on the master host for release 1.9.0.

- Log in to the host as root or as a user with superuser privileges.

- Start the docker service, if necessary.

      systemctl is-active docker || systemctl start docker

- Disable SELinux temporarily, if necessary.

  - Determine the current mode.

        sestatus | awk '/Current mode:/ { print $3 }'

    - If the result is not enforcing, skip the next substep and proceed to saving the current serviced configuration file.

    - If the result is enforcing, perform the next substep.

  - Disable SELinux temporarily.

        setenforce 0

- Save the current serviced configuration file as a reference and set permissions to read-only.

      cp /etc/default/serviced /etc/default/serviced-pre-1.9.0
      chmod 0440 /etc/default/serviced-pre-1.9.0

- Create a temporary directory, if necessary. The RPM installation process writes a script to TMP and starts it with exec to migrate Elasticsearch.

  - Determine whether the /tmp directory is mounted on a partition with the noexec option set.

        awk '$2 ~ /\/tmp/ { if ($4 ~ /noexec/) print "protected" }' < /proc/mounts

    If the command returns a result, perform the following substeps. Otherwise, skip the remaining substeps and proceed to installing the new serviced RPM package.

  - Create a temporary storage variable for TMP, if necessary.

        test -n "$TMP" && tempTMP=$TMP

  - Create a temporary directory.

        mkdir $HOME/tempdir && export TMP=$HOME/tempdir

- Install the new serviced RPM package.

      yum install --enablerepo=zenoss-mirror /opt/zenoss-repo-mirror/serviced-1.9.0-1.x86_64.rpm

  If yum returns an error due to dependency issues, see Resolving package dependency conflicts for potential resolutions. On delegate hosts, the following error messages are expected and can be ignored:

      ===== FAILURE =====
      Creating a backup of elasticsearch-logstash data failed
      ===================
      warning: %post(serviced-1.9.0-0.0.479.unstable.x86_64) scriptlet failed, exit status 1

- Enable SELinux, if necessary. Perform this step only if you disabled SELinux earlier in this procedure:

      setenforce 1

- Restore the previous value of TMP, if necessary.

      test -n "$tempTMP" && export TMP=$tempTMP

- Make a backup copy of the new configuration file and set permissions to read-only.

      cp /etc/default/serviced /etc/default/serviced-1.9.0-orig
      chmod 0440 /etc/default/serviced-1.9.0-orig

- Compare the new configuration file with the configuration file of the previous release.

  - Identify the configuration files to compare.

        ls -l /etc/default/serviced*

    The original versions of the configuration files should end with orig, but you may have to compare the dates of the files.

  - Compare the new and previous configuration files. Replace New-Version with the name of the new configuration file, and replace Previous-Version with the name of the previous configuration file:

        diff New-Version Previous-Version

    For example, to compare version 1.8.0 with the most recent version, enter the following command:

        diff /etc/default/serviced-1.8.0-orig /etc/default/serviced-1.9.0-orig

  - If the command returns no result, restore the backup of the previous configuration file.

        cp /etc/default/serviced-pre-1.9.0 /etc/default/serviced && chmod 0644 /etc/default/serviced

  - If the command returns a result, restore the backup of the previous configuration file, and then, optionally, use the results to edit the restored version.

        cp /etc/default/serviced-pre-1.9.0 /etc/default/serviced && chmod 0644 /etc/default/serviced

    For more information about configuring a host, see Configuration variables.

- Reload the systemd manager configuration.

      systemctl daemon-reload
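
The noexec test in the preceding procedure lends itself to a small reusable check. The following is a rough sketch under stated assumptions: the function name tmp_is_noexec is hypothetical, and the optional file argument exists only so that the check can be tried against a saved copy of the mounts table. Unlike the awk pattern in the procedure, which matches any mount point containing /tmp (including /var/tmp), this sketch matches the /tmp mount point exactly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) when /tmp is mounted with noexec.
# The optional argument names a mounts table; it defaults to /proc/mounts.
tmp_is_noexec() {
    local mounts=${1:-/proc/mounts}
    # Field 2 is the mount point; field 4 is the comma-separated option list.
    awk '$2 == "/tmp" && $4 ~ /(^|,)noexec(,|$)/ { found = 1 }
         END { exit(found ? 0 : 1) }' "$mounts"
}

# Possible usage, mirroring the substeps of the procedure:
#   if tmp_is_noexec; then
#       test -n "$TMP" && tempTMP=$TMP
#       mkdir -p "$HOME/tempdir" && export TMP="$HOME/tempdir"
#   fi
```

If /tmp is not a separate mount point, the function reports failure, which corresponds to the case in which no temporary directory is needed.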

Optional: Installing a security certificate

The default, insecure certificate that Control Center uses for TLS-encrypted communications is based on a public certificate compiled into serviced. Use this procedure to replace the default certificate files with your own files.

- If you are using virtual host public endpoints for your Zenoss Service Dynamics deployment, you need a wildcard certificate or a subject alternative name (SAN) certificate.

- If your end users access the browser interface through a reverse proxy, the reverse proxy may provide the browser with its own SSL certificate. If so, please contact Zenoss Support for additional assistance.

To perform this procedure, you need valid certificate files. For information about generating a self-signed certificate, see Creating a self-signed security certificate.

To use your own certificate files, perform this procedure on the Control Center master host and on each Control Center delegate host in your environment.

Follow these steps:

- Log in to the host as root or as a user with superuser privileges.

- Use a secure copy program to copy the key and certificate files to /tmp.

- Move the key file to the /etc/pki/tls/private directory. Replace <KEY_FILE> with the name of your key file:

      mv /tmp/<KEY_FILE>.key /etc/pki/tls/private

- Move the certificate file to the /etc/pki/tls/certs directory. Replace <CERT_FILE> with the name of your certificate file:

      mv /tmp/<CERT_FILE>.crt /etc/pki/tls/certs

- Updates only: Create a backup copy of the Control Center configuration file. Do not perform this step for a fresh install:

      cp /etc/default/serviced /etc/default/serviced.before-cert-files

- Edit the Control Center configuration file.

  - Open /etc/default/serviced in a text editor.

  - Locate the line for the SERVICED_KEY_FILE variable, and then make a copy of the line, immediately below the original.

  - Remove the number sign character (#) from the beginning of the line.

  - Replace the default value with the full pathname of your key file.

  - Locate the line for the SERVICED_CERT_FILE variable, and then make a copy of the line, immediately below the original.

  - Remove the number sign character (#) from the beginning of the line.

  - Replace the default value with the full pathname of your certificate file.

  - Save the file, and then close the editor.

- Verify the settings in the configuration file.

      grep -E '^[[:space:]]*[A-Z_]+' /etc/default/serviced

- Updates only: Reload the systemd manager configuration. Do not perform this step for a fresh install:

      systemctl daemon-reload
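
The verification step in the preceding procedure lists every active setting. To check only the two certificate variables, something like the following sketch may help. The function names active_value and cert_settings_active are hypothetical, and the sketch assumes plain NAME=value lines without quotes or trailing comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of an uncommented NAME=value line.
# If the variable is assigned more than once, the last assignment is printed.
active_value() {
    local name=$1 file=$2
    sed -n "s/^[[:space:]]*${name}=//p" "$file" | tail -n 1
}

# Hypothetical helper: succeed only when both certificate variables are
# uncommented and non-empty in the given configuration file.
cert_settings_active() {
    local file=${1:-/etc/default/serviced}
    [ -n "$(active_value SERVICED_KEY_FILE "$file")" ] &&
        [ -n "$(active_value SERVICED_CERT_FILE "$file")" ]
}

# Possible usage:
#   cert_settings_active || echo "certificate variables are not both set"
```

The sketch confirms only that the variables are active; it does not confirm that the named files exist or contain a valid key and certificate.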

Updating the ZooKeeper image on ensemble nodes

Use this procedure to install a new Docker image for ZooKeeper on ZooKeeper ensemble nodes.

- Log in to the master host as root or as a user with superuser privileges.

- Identify the hosts in the ZooKeeper ensemble.

      grep -E '^[[:space:]]*SERVICED_ZK=' /etc/default/serviced

  The result is a list of 3 or 5 hosts, separated by the comma character (,). The master host is always a node in the ZooKeeper ensemble.

- Log in to a ZooKeeper ensemble node as root or as a user with superuser privileges.

- Change directory to /root.

      cd /root

- Extract the ZooKeeper image.

      yes | ./install-zenoss-isvcs-zookeeper_v*.run

- Optional: Delete the archive file.

      rm -i ./install-zenoss-isvcs-zookeeper_v*.run

- Repeat the preceding four steps on each delegate that is a node in the ZooKeeper ensemble.
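
When the ensemble has several nodes, it can help to turn the SERVICED_ZK value into a list of host names to visit. The following is a minimal sketch: the function name zk_hosts is hypothetical, and the sketch assumes an unquoted value of the form host1:2181,host2:2181,host3:2181, which may not match every deployment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hosts named in SERVICED_ZK, one per line.
# Assumes an unquoted, comma-separated list of host:port entries.
zk_hosts() {
    local file=${1:-/etc/default/serviced}
    sed -n 's/^[[:space:]]*SERVICED_ZK=//p' "$file" | tail -n 1 |
        tr ',' '\n' |
        sed -e 's/:[0-9]*$//' -e '/^$/d'
}

# Possible usage: print a reminder for each ensemble node.
#   for host in $(zk_hosts); do
#       echo "Update the ZooKeeper image on $host"
#   done
```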

Start Control Center

There are separate start-up procedures for single- and multi-host deployments of Control Center. Please use the procedure appropriate to your deployment.

Starting Control Center (single-host deployment)

Use this procedure to start Control Center in a single-host deployment. The default configuration of the Control Center service (serviced) is to start when the host starts. This procedure is only needed after stopping serviced to perform maintenance tasks.

- Log in to the master host as root or as a user with superuser privileges.

- Enable serviced, if necessary.

      systemctl is-enabled serviced || systemctl enable serviced

- Start the Control Center service.

      systemctl start serviced

- Optional: Monitor the startup, if desired.

      journalctl -u serviced -f -o cat

Starting Control Center (multi-host deployment)

Use this procedure to start Control Center in a multi-host deployment. The default configuration of the Control Center service (serviced) is to start when the host starts. This procedure is only needed after stopping serviced to perform maintenance tasks.

- Log in to the master host as root or as a user with superuser privileges.

- Enable serviced, if necessary.

      systemctl is-enabled serviced || systemctl enable serviced

- Identify the hosts in the ZooKeeper ensemble.

      grep -E '^[[:space:]]*SERVICED_ZK=' /etc/default/serviced

  The result is a list of 1, 3, or 5 hosts, separated by the comma character (,). The master host is always a node in the ZooKeeper ensemble.

- In separate windows, log in to each of the delegate hosts that are nodes in the ZooKeeper ensemble as root or as a user with superuser privileges.

- On all ensemble hosts, start serviced.

  The window of time for starting a ZooKeeper ensemble is relatively short. The goal of this step is to start Control Center on each ensemble node at about the same time, so that each node can participate in electing the leader.

      systemctl start serviced

- On the master host, check the status of the ZooKeeper ensemble.

  - Attach to the container of the ZooKeeper service.

        docker exec -it serviced-isvcs_zookeeper bash

  - Query the master host and identify its role in the ensemble.

    Replace Master with the hostname or IP address of the master host:

        { echo stats; sleep 1; } | nc Master 2181 | grep Mode

    The result includes leader or follower. When multiple hosts rely on the ZooKeeper instance on the master host, the result includes standalone.

  - Query the other delegate hosts to identify their role in the ensemble.

    Replace Delegate with the hostname or IP address of a delegate host:

        { echo stats; sleep 1; } | nc Delegate 2181 | grep Mode

  - Detach from the container of the ZooKeeper service.

        exit

  If none of the nodes reports that it is the ensemble leader within a few minutes of starting serviced, reboot the ensemble hosts.

- Log in to each of the delegate hosts that are not nodes in the ZooKeeper ensemble as root or as a user with superuser privileges, and then start serviced.

      systemctl start serviced

- Optional: Monitor the startup, if desired.

      journalctl -u serviced -f -o cat
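
The ensemble status check in the preceding procedure relies on reading the Mode line that each node returns. The following sketch shows one way to extract that value and to test whether any node reports itself as leader. The function names zk_mode and ensemble_has_leader are hypothetical, and the sketch assumes that the status text contains a line of the form "Mode: value", as the grep command in the procedure implies.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ZooKeeper status text on standard input and
# print the value of the first "Mode:" line (leader, follower, standalone).
zk_mode() {
    awk -F': *' '/^Mode:/ { print $2; exit }'
}

# Hypothetical helper: succeed when any of the given modes is "leader".
ensemble_has_leader() {
    local mode
    for mode in "$@"; do
        [ "$mode" = "leader" ] && return 0
    done
    return 1
}

# Possible usage inside the ZooKeeper container (Master is a placeholder):
#   mode=$({ echo stats; sleep 1; } | nc Master 2181 | zk_mode)
#   ensemble_has_leader "$mode" || echo "Master is not the leader"
```

A single-node deployment reports standalone, so ensemble_has_leader is meaningful only when the ensemble has 3 or 5 nodes.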

Post-upgrade procedures

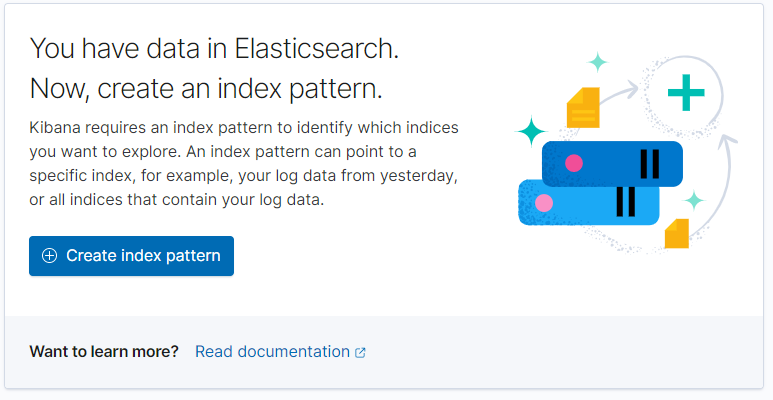

Optional: Creating an Elasticsearch index pattern

Use this procedure to create an Elasticsearch index pattern for the Resource Manager log data that is managed by Control Center. This procedure is necessary only if you chose to migrate historical log data when you updated serviced on the master host.

- Log in to the Control Center browser interface.

- At the top of the page, select Logs.

- In the Kibana window, select Create index pattern.

- In the Index pattern name field, enter the following text:

      logstash-*

- Select Next step.

- From the menu in the Time pattern field, select @timestamp.

- Select Show advanced settings.

- In the Custom index pattern ID field, enter the following text:

      logstash-*

- Select Create index pattern.

- Close the Kibana window.

Removing unused images

Use this procedure to identify and remove unused Control Center images.

- Log in to the master host as root or as a user with superuser privileges.

- Identify the images associated with the installed version of serviced.

      serviced version | grep Images

  Example result:

      IsvcsImages: [zenoss/serviced-isvcs:v73 zenoss/isvcs-zookeeper:v16]

- Start Docker, if necessary.

      systemctl status docker || systemctl start docker

- Display the serviced images in the local repository.

      docker images | awk '/REPO|isvcs/'

  Example result (edited to fit):

      REPOSITORY               TAG   IMAGE ID
      zenoss/serviced-isvcs    v73   0bd933b3fb2f
      zenoss/serviced-isvcs    v70   c19f1e317158
      zenoss/isvcs-zookeeper   v16   7ea8c92ca1ad
      zenoss/isvcs-zookeeper   v14   0ff3b3117fb8

  The example result shows the current versions and one set of previous versions. Your result may include additional previous versions and will show different image IDs.

- Remove unused images.

  Replace Image-ID with the image ID of an image for a previous version.

      docker rmi Image-ID

  Repeat this command for each unused image.
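
Matching the two listings by eye becomes error-prone when many previous versions are present. The following sketch compares saved copies of the two command outputs and prints the IDs of images that are not in the current list. The function name unused_image_ids is hypothetical, and the sketch assumes the output formats shown in the examples above: a bracketed, space-separated list of repository:tag pairs, and a table whose first three columns are repository, tag, and image ID.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IDs of isvcs images that are not in use.
#   $1  file holding the output of: serviced version | grep Images
#   $2  file holding the output of: docker images | awk '/REPO|isvcs/'
unused_image_ids() {
    awk '
        # First file: collect the current repository:tag pairs.
        NR == FNR {
            gsub(/.*\[|\].*/, "")
            n = split($0, parts, " ")
            for (i = 1; i <= n; i++) current[parts[i]] = 1
            next
        }
        # Second file: skip the header row, then print the ID of every
        # image whose repository:tag pair is not in the current list.
        $1 != "REPOSITORY" {
            key = $1 ":" $2
            if (!(key in current)) print $3
        }
    ' "$1" "$2"
}

# Possible usage:
#   serviced version | grep Images > /tmp/current.txt
#   docker images | awk "/REPO|isvcs/" > /tmp/images.txt
#   unused_image_ids /tmp/current.txt /tmp/images.txt
```

Review the printed IDs before passing any of them to docker rmi, because the sketch does not check whether a container still uses an image.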

Optional: Adding ZooKeeper security to an existing deployment

Use this procedure to secure ZooKeeper in an existing Control Center deployment. For more information, see ZooKeeper security.

- Set the Control Center variables for ZooKeeper security.

- Stop Control Center on the master host and all delegate hosts.

- On each Control Center host, remove the ZooKeeper data directory.

  - Log in to the host as root or as a user with superuser privileges.

  - Remove the directory.

        rm -rf --preserve-root /opt/serviced/var/isvcs/zookeeper

- Optional: Secure ZooKeeper data nodes.