Operating system requirements

Control Center has been tested with the 64-bit version of the following Linux distributions:

| Operating System | Version |
|---|---|
| CentOS | 7.9 |
| AlmaLinux / Rocky Linux | 8.10, 9.4 |
| Red Hat Enterprise Linux (RHEL) | 7.9, 8.10, 9.4 |

Kernel versions 3.10.0-327.22.2.el7.x86_64 and higher are tested. For best performance, keep the kernel up to date.

All hosts in a Control Center deployment must have identical Linux distributions, versions, and kernels installed.
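Because every host must match, you may want to compare kernels before installing. The following POSIX shell sketch is illustrative only. `kernel_at_least` and `all_identical` are hypothetical helper names, not Control Center tools. The version comparison assumes GNU `sort -V` from coreutils, whose ordering is close to, but not identical to, RPM's version comparison.

```shell
# Illustrative sketch; function names are hypothetical helpers.

# kernel_at_least VERSION MINIMUM
# Succeeds if VERSION sorts at or after MINIMUM in GNU sort's version order.
kernel_at_least() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# all_identical VALUE...
# Succeeds if every argument is the same string, for example the
# `uname -r` output collected from each host.
all_identical() {
    first=$1
    for value in "$@"; do
        [ "$value" = "$first" ] || return 1
    done
    return 0
}
```

For example, `kernel_at_least "$(uname -r)" 3.10.0-327.22.2.el7.x86_64` checks the local kernel. The command `all_identical "$(ssh master uname -r)" "$(ssh delegate1 uname -r)"` compares two hosts; `master` and `delegate1` are placeholder host names.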

The supported distributions provide a variety of server configurations. Control Center is tested on operating system platforms that are installed and configured with standard options. Docker and Control Center are tested on the Minimal Install configuration with the NFS and Network Time Protocol (NTP) packages installed.

The locale setting on all hosts must be en_US.UTF-8. Other settings are not supported.
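One way to confirm the setting is to pass the output of the standard `locale` command through a small check. In the sketch below, the function name is hypothetical. The check fails if any non-empty `LANG` or `LC_*` value differs from en_US.UTF-8.

```shell
# Illustrative sketch; locale_is_en_us_utf8 is a hypothetical helper.
# Reads `locale` output (NAME=value lines) on standard input and succeeds
# only if every non-empty LANG or LC_* value is en_US.UTF-8.
locale_is_en_us_utf8() {
    status=0
    while IFS='=' read -r name value; do
        value=$(printf '%s' "$value" | tr -d '"')   # LC_* values are quoted
        case $name in
            LANG|LC_*)
                if [ -n "$value" ] && [ "$value" != "en_US.UTF-8" ]; then
                    echo "unsupported locale: $name=$value" >&2
                    status=1
                fi
                ;;
        esac
    done
    return "$status"
}
```

For example, run `locale | locale_is_en_us_utf8`. On systemd-based distributions, `localectl set-locale LANG=en_US.UTF-8` sets the system locale.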

Control Center relies on the system clock to synchronize its actions. The installation procedures include optional steps to add the NTP daemon to all hosts. By default, the NTP daemon synchronizes the system clock by communicating with standard time servers available on the internet. Use the default configuration or configure the daemon to use a time server in your environment.

Warning

Because Control Center relies on the system clock, do not pause a virtual machine that is running Control Center while an application is running.

Networking

On startup, Docker creates the docker0 virtual interface and selects an unused IP address and subnet (typically, 172.17.0.1/16) to assign to the interface. The virtual interface is used as a virtual Ethernet bridge, and automatically forwards packets among real and virtual interfaces attached to it. The host and all of its containers communicate through this virtual bridge.

Docker can only check directly connected routes, so the subnet it chooses for the virtual bridge might be inappropriate for your environment. To customize the virtual bridge subnet, refer to Docker's advanced network configuration article.
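Before you customize the bridge, check whether the subnet Docker chose overlaps a network that is already routed in your environment. The following POSIX shell sketch compares two IPv4 CIDR blocks. The function names are hypothetical, and the sketch does not validate its input.

```shell
# Illustrative sketch; all function names are hypothetical helpers (IPv4 only).

# ipv4_to_int A.B.C.D: print the address as a 32-bit integer.
ipv4_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# prefix_mask LEN: print the netmask for a prefix length (0-32) as an integer.
prefix_mask() {
    if [ "$1" -eq 0 ]; then
        echo 0
    else
        echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
    fi
}

# cidrs_overlap ADDR/LEN ADDR/LEN: succeed if the two blocks share any
# address, that is, if they agree on the bits of the shorter prefix.
cidrs_overlap() {
    len=${1#*/}
    len2=${2#*/}
    if [ "$len2" -lt "$len" ]; then len=$len2; fi
    mask=$(prefix_mask "$len")
    a=$(ipv4_to_int "${1%/*}")
    b=$(ipv4_to_int "${2%/*}")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}
```

The command `ip -4 -o addr show docker0 | awk '{print $4}'` prints the bridge address in CIDR form (for example, 172.17.0.1/16). You can compare that value against each of your site's subnets. If they overlap, Docker's `--bip` daemon option (`bip` in `daemon.json`) assigns a different bridge address. See the Docker article referenced above for details.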

The following list highlights potential communication conflicts:

- If you use a firewall utility, ensure that it does not conflict with Docker. The default configurations of firewall utilities such as FirewallD include rules that can conflict with Docker, and therefore with Control Center. The following interactions illustrate the conflicts:

  - The firewalld daemon removes the DOCKER chain from iptables when it starts or restarts.

  - Under systemd, firewalld is started before Docker. However, if you start or restart firewalld while Docker is running, you must restart Docker.

- Even if you do not use a firewall utility, your firewall settings might still prevent communications over the Docker virtual bridge. This issue occurs when iptables INPUT rules restrict most traffic. To ensure that the bridge works properly, append an INPUT rule to your iptables configuration that allows traffic on the bridge subnet. For example, if docker0 is bound to 172.17.42.1/16, then a command like the following would ensure that the bridge works.

Note: Before modifying your iptables configuration, consult your networking specialist.

iptables -A INPUT -d 172.17.0.0/16 -j ACCEPT
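Both conflicts described above can be handled with small, repeatable steps. The following POSIX shell sketch is illustrative and is not a Control Center tool. The function names are hypothetical, and the iptables and systemctl calls must run as root.

- `restore_docker_chain` restarts Docker when the DOCKER chain is missing from the nat table.
- `allow_bridge_subnet` derives the bridge network from an address such as 172.17.42.1/16. It then appends the INPUT rule only if `iptables -C` reports that the rule is not already present.

```shell
# Illustrative sketch; function names are hypothetical helpers (run as root).

# network_of ADDR/LEN: print the network in CIDR form,
# for example 172.17.42.1/16 -> 172.17.0.0/16 (IPv4 only).
network_of() {
    len=${1#*/}
    old_ifs=$IFS
    IFS=.
    set -- ${1%/*}                 # split the dotted quad into $1..$4
    IFS=$old_ifs
    n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    if [ "$len" -eq 0 ]; then mask=0; else mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF )); fi
    n=$(( n & mask ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))/$len"
}

# allow_bridge_subnet ADDR/LEN: append an INPUT ACCEPT rule for the bridge
# network unless an identical rule already exists (iptables -C checks).
allow_bridge_subnet() {
    subnet=$(network_of "$1")
    if ! iptables -C INPUT -d "$subnet" -j ACCEPT 2>/dev/null; then
        iptables -A INPUT -d "$subnet" -j ACCEPT
    fi
}

# restore_docker_chain: restart Docker if its DOCKER chain is missing
# from the nat table, for example after firewalld started or restarted.
restore_docker_chain() {
    if iptables -t nat -n -L DOCKER >/dev/null 2>&1; then
        echo "DOCKER chain present; no restart needed"
    else
        echo "DOCKER chain missing; restarting docker"
        systemctl restart docker
    fi
}
```

For example, `allow_bridge_subnet "$(ip -4 -o addr show docker0 | awk '{print $4}')"` uses the bridge's actual address. Rules appended with `iptables -A` do not persist across reboots by themselves. How you save them depends on your firewall tooling.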

iptable_nat on RHEL 8+

The kernel module iptable_nat is required by HBase. It is not installed by default on all versions of RHEL 8. Check that it is installed by following the instructions here.
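A loaded module appears by name in the first column of /proc/modules, which is the file `lsmod` reads. The sketch below checks that column. As one possible remediation, it loads the module with `modprobe` and lists it in /etc/modules-load.d so that systemd loads it at boot. The function names and the file name iptable_nat.conf are hypothetical.

```shell
# Illustrative sketch; function names and the .conf file name are hypothetical.

# module_loaded NAME: succeed if NAME appears in the first column of
# /proc/modules-style text read from standard input.
module_loaded() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# ensure_iptable_nat: load iptable_nat now and at every boot (run as root).
ensure_iptable_nat() {
    if ! module_loaded iptable_nat < /proc/modules; then
        modprobe iptable_nat
    fi
    echo iptable_nat > /etc/modules-load.d/iptable_nat.conf
}
```

A module built into the kernel, rather than loaded separately, does not appear in /proc/modules, so this check would report it as missing.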

Additional requirements and considerations

Control Center requires a private IPv4 network with a 16-bit prefix (/16) for virtual IP addresses. The default network is 10.3/16 (10.3.0.0/16), but during installation you can select any valid IPv4 /16 address space.
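If you choose a network other than the default, both constraints (a /16 prefix and a private range) are easy to check mechanically. The following POSIX shell sketch assumes that "private" means the RFC 1918 ranges (10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16). It also rejects blocks with host bits set. The function name is hypothetical, and this is not how the Control Center installer validates its input.

```shell
# Illustrative sketch; is_private_slash16 is a hypothetical helper.
# Succeeds if ADDR/LEN is a /16 network address (host bits zero) inside an
# RFC 1918 range. Octet values are not range-checked.
is_private_slash16() {
    [ "${1#*/}" = 16 ] || return 1
    old_ifs=$IFS
    IFS=.
    set -- ${1%/*}                 # split the dotted quad into $1..$4
    IFS=$old_ifs
    [ $# -eq 4 ] || return 1
    n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    # A /16 network address has zeros in its low 16 bits.
    [ $(( n & 0xFFFF )) -eq 0 ] || return 1
    # 10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16
    [ $(( n & 0xFF000000 )) -eq $(( 0x0A000000 )) ] ||
        [ $(( n & 0xFFF00000 )) -eq $(( 0xAC100000 )) ] ||
        [ $(( n & 0xFFFF0000 )) -eq $(( 0xC0A80000 )) ]
}
```

The sketch expects the full dotted form, for example `is_private_slash16 10.3.0.0/16`, rather than the 10.3/16 shorthand.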

Before installation, add DNS entries for the Control Center master host and all delegate hosts. Verify that all hosts in Control Center resource pools can:

- Resolve the hostnames of all other delegate hosts to IPv4 addresses. For example, if the public IP address of your host is 192.0.2.1, then the hostname -i command should return 192.0.2.1.

- Respond with an IPv4 address other than 127.x.x.x when ping Hostname is invoked.

- Return a unique result from the hostid command.
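You can script these checks with standard tools. In the sketch below, `is_non_loopback_ipv4` validates a single address. `all_unique` reads one `hostid` value per line and fails if any value repeats. Both function names are hypothetical, and the sketch does not test reachability.

```shell
# Illustrative sketch; function names are hypothetical helpers.

# is_non_loopback_ipv4 ADDR: succeed if ADDR is a dotted-quad IPv4 address
# outside 127.0.0.0/8.
is_non_loopback_ipv4() {
    case $1 in
        ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;   # empty, stray characters, bad dots
        127.*) return 1 ;;                    # loopback
    esac
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    [ $# -eq 4 ] || return 1
    for octet in "$@"; do
        [ "$octet" -le 255 ] || return 1
    done
    return 0
}

# all_unique: read one value per line on standard input (for example,
# hostid output from every host) and succeed if no value repeats.
all_unique() {
    [ -z "$(sort | uniq -d)" ]
}
```

For example, `is_non_loopback_ipv4 "$(hostname -i)"` checks the local host when `hostname -i` returns a single address. The command `getent ahostsv4 delegate1 | awk 'NR == 1 { print $1 }'` prints the first IPv4 address that another host name resolves to; `delegate1` is a placeholder. To check hostid uniqueness, collect the `hostid` output from each host into one stream (for example, with `ssh`) and pipe it to `all_unique`.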

Control Center relies on Network File System (NFS) for its distributed file system implementation. Therefore, Control Center hosts cannot run a general-purpose NFS server, and all Control Center hosts require NFS.

Warning

Disabling IPv6 can prevent the NFS server from restarting, due to an rpcbind issue. Zenoss recommends leaving IPv6 enabled on the Control Center master host.
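The kernel reports whether IPv6 is disabled through the net.ipv6.conf.all.disable_ipv6 setting, where 0 means IPv6 is enabled. The sketch below reads that value from its /proc path, and you can pass a different path for testing. The function name is hypothetical. If IPv6 was disabled at boot (for example, with the ipv6.disable=1 kernel parameter), the file is absent and the check fails as intended.

```shell
# Illustrative sketch; ipv6_enabled is a hypothetical helper.
# ipv6_enabled [FILE]: succeed if the disable_ipv6 value read from FILE
# (default: the kernel's "all" setting) is 0, meaning IPv6 is enabled.
ipv6_enabled() {
    setting_file=${1:-/proc/sys/net/ipv6/conf/all/disable_ipv6}
    [ -r "$setting_file" ] || return 1
    [ "$(cat "$setting_file")" = 0 ]
}
```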

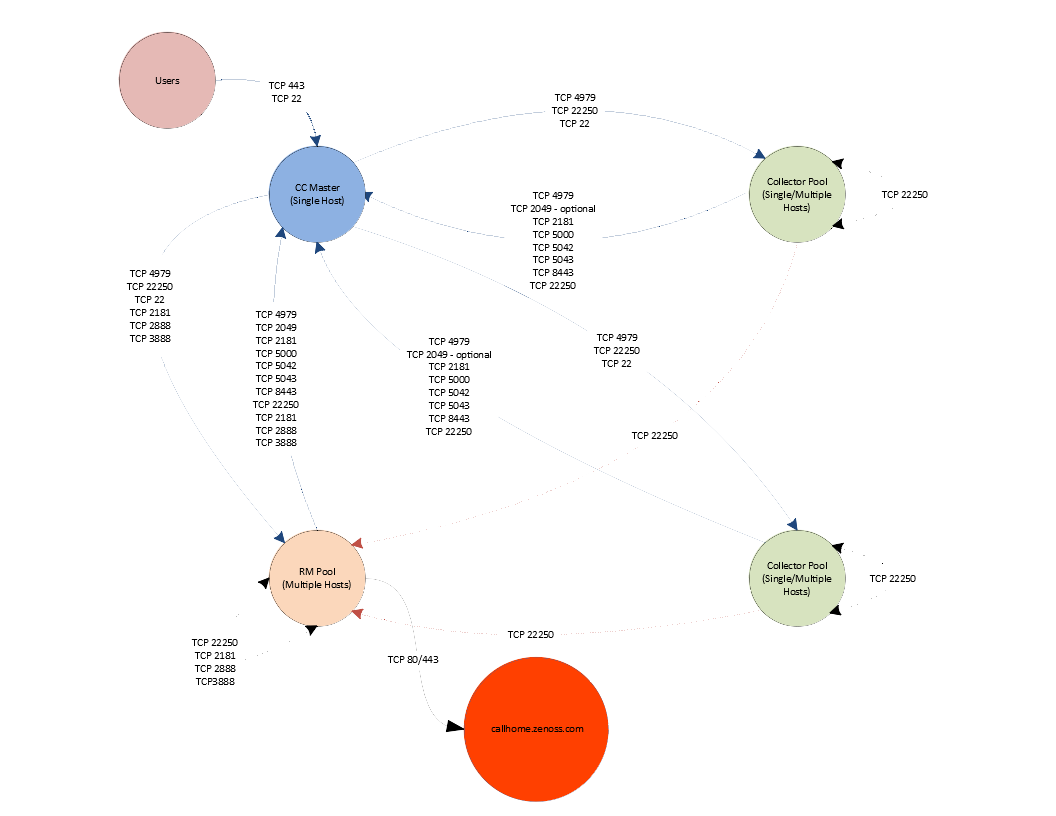

Zenoss Callhome

Hosts in the Resource Manager pool require outbound access to callhome.zenoss.com (TCP 80/443) in order to relay generic licensing utilization statistics about your environment.

If you have deployed Resource Manager in a non-standard fashion, the pool where the zenmwd service runs will require outbound TCP 80/443 access to callhome.zenoss.com. More information on the data sent via this process can be found in the Appendix.
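To confirm outbound access before installing, a plain TCP connection test on both ports is usually enough. The sketch below relies on bash's /dev/tcp redirection and the coreutils `timeout` command, so bash must be installed even if the script runs under another shell. It shows only that a TCP connection opens. It does not show that a proxy or TLS inspection in your network will pass the callhome traffic. The function name is hypothetical.

```shell
# Illustrative sketch; can_reach is a hypothetical helper.
# can_reach HOST PORT: succeed if a TCP connection to HOST:PORT opens
# within 5 seconds, using bash's /dev/tcp pseudo-device.
can_reach() {
    timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' can_reach "$1" "$2" 2>/dev/null
}
```

For example, `can_reach callhome.zenoss.com 443 && echo reachable` tests the HTTPS port from the current host.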

Security

During installation, Control Center has no knowledge of Resource Manager port requirements, so the installation procedure includes disabling the firewall. After both Control Center and Resource Manager are installed, you can close unused ports.
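On the supported distributions, systemd manages FirewallD. Disabling it for the installation therefore means stopping the service and preventing it from starting at boot. The sketch below wraps those `systemctl` calls in a hypothetical function that stops firewalld only if it is active.

```shell
# Illustrative sketch; disable_firewalld_for_install is a hypothetical helper.
# Stop firewalld if it is running and keep it from starting at boot.
# Run as root.
disable_firewalld_for_install() {
    if systemctl is-active --quiet firewalld; then
        systemctl stop firewalld
    fi
    systemctl disable firewalld
}
```

To turn the firewall back on later, use `systemctl enable firewalld` and `systemctl start firewalld`. Then restart Docker, as described in the Networking section.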

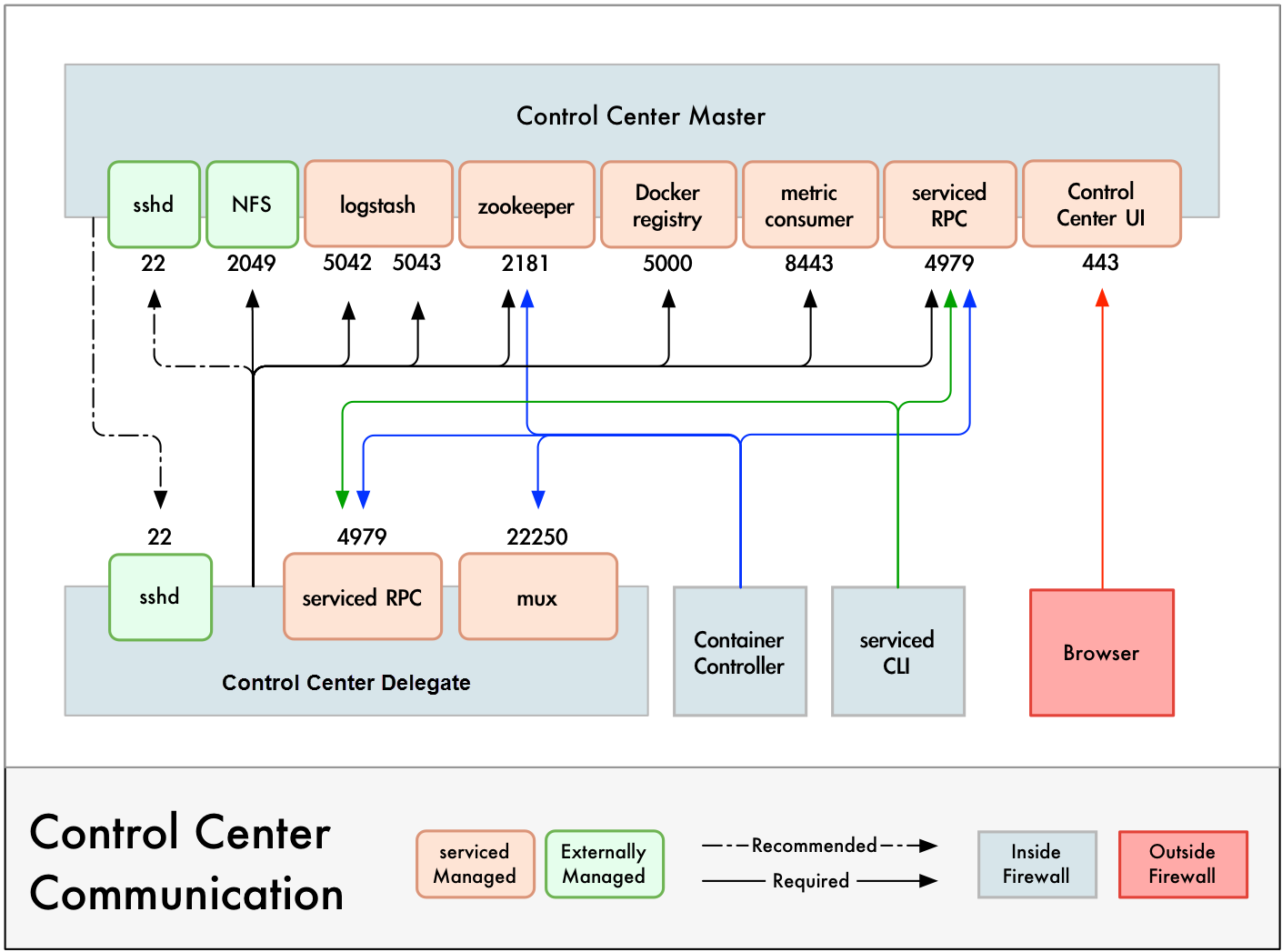

Control Center includes a virtual multiplexer (mux) that performs the following functions:

- Aggregates the UDP and TCP traffic among the services it manages. The aggregation is opaque to services, and mux traffic is encrypted when it travels among containers on remote hosts. (Traffic among containers on the same host is not encrypted.)

- Along with the distributed file system, enables Control Center to quickly deploy services to any pool host.

- Reduces the number of open ports required on a Control Center host to a predictable set.

The following figure identifies the ports that Control Center requires. All traffic is TCP. Except for port 4979, all ports are configurable.

Control Center relies on the system clock to synchronize its actions and, indirectly, on ntpd or chrony to synchronize clocks among multiple hosts. In the default configuration of ntpd, the firewalls of master and delegate hosts must allow incoming UDP traffic on port 123.
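If you re-enable FirewallD after installation, its predefined `ntp` service opens UDP port 123. The sketch below adds that service to both the runtime and the permanent configuration, so no reload is needed. The function name is hypothetical. Hosts that use plain iptables need an equivalent INPUT rule instead.

```shell
# Illustrative sketch; allow_ntp is a hypothetical helper.
# Permit incoming NTP (UDP 123) through firewalld. Run as root on master
# and delegate hosts.
allow_ntp() {
    # Apply to the running firewall first, then persist across reboots.
    firewall-cmd --add-service=ntp &&
        firewall-cmd --permanent --add-service=ntp
}
```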

For more information about port requirements, see Resource Manager network ports.

Additional requirements and considerations

- To install Control Center, you must log in as root, or as a user with superuser privileges.

- Access to the Control Center browser interface requires a login account on the Control Center master host. Authentication through Pluggable Authentication Modules (PAM) is tested. By default, users must be members of the wheel group. You can change the default group by setting the SERVICED_ADMIN_GROUP variable; the replacement group does not need superuser privileges.

- The serviced startup script sets the hard and soft open files limit to 1048576. The script does not modify the /etc/security/limits.conf file.

- Control Center has been tested with Security-Enhanced Linux (SELinux) enabled.
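Two of the items above lend themselves to a quick scripted check. The sketch below rests on stated assumptions, and the function names are hypothetical.

- It assumes that Control Center settings such as SERVICED_ADMIN_GROUP are stored as NAME=value lines, possibly commented out, in a file such as /etc/default/serviced. Confirm the file for your release before using it.
- It reads the soft and hard "Max open files" values from a process's /proc/PID/limits file.

```shell
# Illustrative sketch; function names are hypothetical helpers.

# set_config_var FILE NAME VALUE: set NAME=VALUE in a shell-style config
# file, uncommenting an existing "# NAME=..." line or appending a new line.
# Requires GNU sed (-i). VALUE must not contain "|" (the sed delimiter).
set_config_var() {
    cfg_file=$1 cfg_name=$2 cfg_value=$3
    if grep -q "^[#[:space:]]*$cfg_name=" "$cfg_file"; then
        sed -i "s|^[#[:space:]]*$cfg_name=.*|$cfg_name=$cfg_value|" "$cfg_file"
    else
        printf '%s=%s\n' "$cfg_name" "$cfg_value" >> "$cfg_file"
    fi
}

# open_files_ok: read a /proc/PID/limits file on standard input and succeed
# if both the soft and hard "Max open files" limits are 1048576.
open_files_ok() {
    awk '/^Max open files/ { found = ($4 == 1048576 && $5 == 1048576) }
         END { exit !found }'
}
```

For example, `set_config_var /etc/default/serviced SERVICED_ADMIN_GROUP ccadmins` sets the group, where `ccadmins` is a placeholder group name; `usermod -aG ccadmins alice` then adds a placeholder user to it. To check a running daemon's limits, use `open_files_ok < "/proc/$(pidof -s serviced)/limits"`.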

Resource Manager network ports

This section includes a network diagram of a Resource Manager deployment featuring four Control Center resource pools:

- One pool for the Control Center master host.

- One pool for most of the Resource Manager services.

- Two pools for Resource Manager collector services (collector pools).